I was recently asked by Julie Swinsco, the Photography tutor at Cadbury College, Birmingham, to come in and talk to the AS Photography students at the end of their year. Getting an artist in to give a different perspective from the teachers’ is something they try to do for every subject in the art/crafts department and I was rather honoured to be asked, considering the number of much more experienced photographer-artists available.

I did two lessons for three classes of students - six in all. The first was on blogging as part of the artistic process, specifically searching the Internet for inspiration and using Tumblr as a scrapbook, which I may write about on the ASH-10 blog later. The second was a three-hour workshop where the students built a basic TTV contraption, went out and took photos, reviewed and processed them in Photoshop and shared the results with the class.

I started with a quick overview of some of the contraptions people had shared in the TTV Flickr group, talked through how to make a contraption by measuring the optimal distance for your lens and then let them go at it. Within an hour they’d all built a ‘trap and taken some photos which, after the break, I showed them how to process. Having seen what they could achieve they then went back out for another go before a brief critique by me of their work in the final ten minutes.

There’s a great post by Sarah NB who was in the first workshop and you can see all the photos from the second and third workshops here (we didn’t think to get them to share their jpegs in the first one…). Julie and I also took photos of the students working which you can find in this set.

Like all good lessons there was a not-so-hidden depth to these workshops. I didn’t just want them to build a lens out of an old camera and take some freaky photos. I wanted them to use this exercise to think about what photography is on a number of levels.

Some of the points I was trying to drive home included:

Analogue manipulation. I emphasised that with my TTV photos I draw a distinction between processing, which happens on the computer, and manipulation, which happens in the contraption. This idea of effectively applying a Photoshop effect to the image before it’s even taken helps the students think about the mechanics of the camera and the physics of light moving through it.

Slow Down. In my previous talk I’d discussed how digital photography in the early 2000s had given me the freedom to become a reasonable photographer by removing the financial barrier of film processing, but mastering the manual Nikon FM2 film camera had helped me become a good one by forcing me to slow down and look at what I was shooting. The unwieldy nature of the TTV contraption is a similar cure for the Auto disease of modern cameras, doubly so as the Auto setting never really works with TTV…

Digital cameras can be stupid. When you stick a modern DSLR into a cardboard tube and ask it to take a photo of the viewfinder of a vintage camera, it gets really confused. The factory settings are designed for the most common types of photography - portraiture, landscapes, parties, etc - and they’re really good at getting a great image in those situations. But take them out of that comfort zone and they lose it. TTV helps the photographer explore life outside of Auto, looking at things like Spot Metering and learning how to switch off the “helpful” features buried in the menus. Having taken this step, applying more control over the camera to “normal” photography can follow.

Working with limitations. Some of the students got wonderfully clear photos from their contraptions. Some of them didn’t and looked a bit dejected. I tried to make it clear that this wasn’t a problem. The point was to take the tools and conditions you had and make the most of them. In the review I highlighted this picture by Ashleigh Kearney-Williams, who I suspect thought she had “failed” at TTV:

She couldn’t get the camera to focus on the viewfinder, probably because of some auto setting confusing things, but the resulting image was lovely, like a scene from a horror movie. I wanted to communicate the idea that perfect photos, the sort that DSLR cameras are designed to take, can be artistically boring, but placing restrictions and barriers in the way can force you to think creatively and produce things you wouldn’t otherwise have thought to do.

A few times during the lessons students mentioned mobile apps like Hipstamatic and Instagram which mimic vintage photography and some of the effects of TTV, and yes, if you want that effect it’s easy to produce it on a computer. I don’t have a problem with that in the slightest. What interests me about taking photos through a TTV contraption isn’t the effect on the image but how it affects the act of creating that image, the act of photography itself.

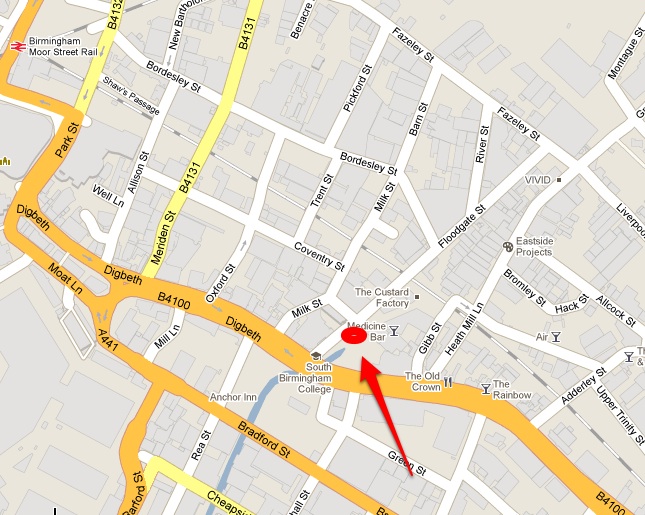

I’m hoping to run a TTV workshop with FizzPop, open to the public of all ages, later this summer. If you’re interested drop me an email and I’ll keep you posted.